Explainable AI: Ensuring Transparency and Trust in Small Business Applications

As you integrate AI-driven systems into your small business operations, it’s vital to verify that these systems are transparent, trustworthy, and aligned with your business values and goals. Explainable AI (XAI) techniques help uncover hidden biases and errors and make decision-making processes transparent. By implementing XAI, you can optimise business decisions, identify areas for improvement, and ensure accountability. With transparent models, you’ll build trust with stakeholders and keep your AI aligned with your business goals. Now, take the next step in ensuring transparency and trust in your small business applications.

Key Takeaways

• Implementing XAI in small business operations helps optimise business decisions by making AI-driven insights transparent, enhancing operational efficiency.
• Transparent AI decision-making processes help keep AI aligned with business values and goals, supporting accountability and helping prevent biases and errors.
• XAI helps uncover hidden patterns and relationships in data, enabling informed decisions that drive growth and profitability in small businesses.
• Model explainability metrics provide quantitative measures of a model’s transparency, building trust with stakeholders and supporting fairness and reliability.
• Explainable AI makes it possible to verify that AI systems are fair, transparent, and trustworthy, mitigating the risks associated with autonomous systems.

Demystifying AI Decision-Making Processes

As you explore the intricacies of AI systems, understanding the decision-making processes behind their outputs becomes imperative, particularly when dealing with high-stakes applications.

In the domain of AI ethics, demystifying these processes is pivotal to achieving transparency and accountability. You must recognise that AI systems aren’t infallible: without human oversight, they can perpetuate biases or make erroneous decisions.

To address this, you need mechanisms that make AI decisions explicable, allowing you to look under the hood of AI decision-making. This involves developing techniques that provide insight into the reasoning behind AI-driven outputs.

By doing so, you can identify potential pitfalls and biases and ensure that AI systems align with your values and goals.

In high-stakes applications, human oversight becomes paramount. You must have the ability to scrutinise and correct AI-driven decisions, especially when they involve critical consequences.

This fusion of human judgement and AI capabilities enables more informed decision-making, mitigating the risks associated with autonomous systems.

Uncovering Hidden Biases and Errors

You can deploy explainable AI techniques to uncover hidden biases and errors in AI decision-making processes, which is vital in high-stakes applications where flawed outputs can have severe consequences.

By doing so, you can verify that your AI systems are fair, transparent, and trustworthy.

Explainable AI enables you to dig deeper into your AI models, identifying biases and errors that could be detrimental to your business.

This is particularly important in applications where AI-driven decisions can have a significant impact on people’s lives, such as in healthcare or finance.

To uncover hidden biases and errors, you can employ various techniques, including:

- Data Forensics: This involves examining your data for patterns and anomalies that may be sources of bias or error.

- Error Profiling: This technique involves creating a profile of the errors made by your AI model, allowing you to identify areas where the model is prone to mistakes. By analysing these errors, you can refine your model to improve its accuracy and fairness.

- Model Interpretability: This involves designing your AI models to provide clear explanations for their decisions, making it easier to identify biases and errors.
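To make data forensics and error profiling concrete, here is a minimal Python sketch, assuming you already have a table of past cases with the true outcome, the model’s prediction and a grouping field such as region. The function names, the 0.1 tolerance and the sample records are hypothetical; the idea is simply to compare base rates and error rates across groups and flag the groups where mistakes cluster.

```python
from collections import defaultdict


def profile_by_group(records, group_key):
    """Summarise each group: row count, positive-label rate and model error rate.

    Each record is a dict holding the group field, a true 'label' (0 or 1)
    and the model's 'prediction' (0 or 1).
    """
    counts = defaultdict(lambda: {"n": 0, "positives": 0, "errors": 0})
    for r in records:
        c = counts[r[group_key]]
        c["n"] += 1
        c["positives"] += r["label"]
        c["errors"] += int(r["label"] != r["prediction"])
    return {
        group: {
            "n": c["n"],
            # Data forensics: very different base rates can signal skewed data.
            "positive_rate": c["positives"] / c["n"],
            # Error profiling: where do the model's mistakes cluster?
            "error_rate": c["errors"] / c["n"],
        }
        for group, c in counts.items()
    }


def flag_groups(profile, tolerance=0.1):
    """Return groups whose error rate exceeds the overall rate by more than `tolerance`."""
    total = sum(p["n"] for p in profile.values())
    overall = sum(p["error_rate"] * p["n"] for p in profile.values()) / total
    return sorted(g for g, p in profile.items() if p["error_rate"] > overall + tolerance)


# Hypothetical outcomes from a loan-approval model, split by region.
records = [
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "north", "label": 0, "prediction": 0},
    {"region": "north", "label": 1, "prediction": 1},
    {"region": "south", "label": 1, "prediction": 0},
    {"region": "south", "label": 0, "prediction": 0},
    {"region": "south", "label": 0, "prediction": 1},
]
profile = profile_by_group(records, "region")
for region, stats in sorted(profile.items()):
    print(region, stats)
print(flag_groups(profile))  # ['south']
```

A flagged group is a prompt for investigation, not proof of bias: small groups can show high error rates by chance, so check group sizes before drawing conclusions.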

XAI in Small Business Operations

As you integrate XAI into your small business operations, you’ll be able to optimise your business decisions by gaining transparency into AI-driven insights.

By leveraging XAI, you’ll enhance operational efficiency by identifying areas where AI can automate tasks, freeing up resources for more strategic initiatives.

Optimising Business Decisions

By integrating explainable AI into their operations, small businesses can uncover hidden patterns and relationships in their data, enabling them to make more informed decisions that drive growth and profitability. You’ll be able to identify areas of improvement, optimise resources, and create strategies tailored to your target audience.

With XAI, you can gain data insights: Explainable AI helps you understand how your data is being used to make predictions, allowing you to identify biases and inaccuracies. This can lead to more accurate forecasting and better strategic planning.

You can streamline decision-making: By providing transparent and interpretable results, XAI enables you to make swift and informed decisions, reducing the risk of costly mistakes.

You can develop targeted strategies: With XAI, you can create tailored marketing campaigns and product development strategies that resonate with your target audience, driving revenue and growth.

Enhancing Operational Efficiency

How can explainable AI optimise your small business operations, freeing up resources and reducing inefficiencies that drain your bottom line?

By integrating explainable AI (XAI) into your operations, you can automate tedious tasks, streamline processes, and gain valuable insights into your supply chain.

XAI can identify bottlenecks in your process, enabling you to make data-driven decisions to improve efficiency. For instance, XAI can analyse your supply chain data to predict demand, optimise inventory, and reduce lead times. This allows you to respond quickly to changes in the market, ensuring you stay competitive.
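One way to keep a demand forecast explainable is to use an additive model, where every forecast breaks down into a baseline plus one contribution per factor. The sketch below assumes hand-set, hypothetical coefficients (a real model would estimate them from sales history) and a simple reorder rule; it illustrates the glass-box idea rather than a production forecasting method.

```python
from dataclasses import dataclass


@dataclass
class AdditiveDemandModel:
    """Glass-box forecast: a baseline plus one additive effect per factor.

    The coefficients used below are illustrative placeholders; in practice they
    would be estimated from your own sales history.
    """
    baseline: float
    weights: dict[str, float]  # factor name -> change in units per unit of factor

    def explain(self, features: dict[str, float]) -> tuple[float, dict[str, float]]:
        # Each factor contributes weight * value, so the forecast decomposes
        # exactly into baseline + sum(contributions): nothing is hidden.
        contributions = {
            name: w * features.get(name, 0.0) for name, w in self.weights.items()
        }
        return self.baseline + sum(contributions.values()), contributions


def reorder_quantity(forecast: float, on_hand: float, safety_stock: float) -> float:
    """Units to order so that stock covers the forecast plus a safety buffer."""
    return max(0.0, forecast + safety_stock - on_hand)


model = AdditiveDemandModel(
    baseline=120.0,
    weights={"is_weekend": 35.0, "promo_active": 50.0, "price_change_pct": -2.0},
)
forecast, parts = model.explain({"is_weekend": 1, "promo_active": 0, "price_change_pct": 5})
print(f"forecast: {forecast:.0f} units")  # forecast: 145 units
for name, value in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name}: {value:+.0f}")
print(f"reorder: {reorder_quantity(forecast, on_hand=100, safety_stock=20):.0f} units")  # reorder: 65 units
```

Because the contributions sum exactly to the forecast, a manager can see that the weekend effect, not a promotion, drove a given rise in demand before committing to extra stock.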

Process automation is another area where XAI can have a significant impact.

By automating repetitive tasks, you can free up staff to focus on higher-value tasks, such as strategy and innovation. XAI can also help you identify areas where automation can have the greatest impact, ensuring you’re maximising your ROI.

With XAI, you’ll have the transparency and trust you need to make informed decisions, freeing you to focus on what matters most – growing your business and achieving your goals.

Building Trust With Transparent Models

As you explore the concept of building trust with transparent models, you’ll want to focus on three key aspects:

- Model interpretability, which involves understanding how your model is making predictions;

- Algorithmic decision-making, which examines the logic behind the model’s choices;

- Model explainability metrics, which provide quantitative measures of your model’s transparency.

Model Interpretability

Model interpretability, a crucial aspect of trustworthy AI, enables you to peek under the hood of complex algorithms, deciphering the decision-making process and fostering a deeper understanding of your models’ inner workings.

By making your models more transparent, you can identify biases, errors, and areas for improvement, ultimately leading to better decision-making and more accurate predictions.

To achieve model interpretability, you can employ various techniques, including:

- Feature attribution: This involves analysing how specific input features contribute to the model’s predictions, allowing you to identify the most important factors driving the model’s decisions.

- Model introspection: This technique involves analysing the model’s internal workings, such as the activation patterns of neurones in neural networks, to gain insights into its decision-making process.

- Partial dependence plots: These plots help visualise the relationship between specific input features and the model’s predictions, providing a clearer understanding of how the model responds to different inputs.
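Feature attribution and partial dependence can both be computed for any model that exposes a predict function. The following sketch uses permutation importance (one common, model-agnostic attribution method; SHAP is another) and a hand-rolled partial dependence calculation. The `black_box` rule and the synthetic data are hypothetical stand-ins; model introspection depends on a model’s internals and is not shown.

```python
import random
from statistics import mean


def black_box(row):
    # Stand-in for any trained model; only its predict interface matters here.
    # Hypothetical rule: uses 'income' and 'tenure', ignores 'postcode_digit'.
    return int(0.7 * row["income"] + 0.3 * row["tenure"] >= 0.5)


def accuracy(model, rows, labels):
    return mean(int(model(r) == y) for r, y in zip(rows, labels))


def permutation_importance(model, rows, labels, feature, n_repeats=20, seed=0):
    """Feature attribution: mean accuracy drop when one feature's values are shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        values = [r[feature] for r in rows]
        rng.shuffle(values)  # break the link between this feature and the outcome
        shuffled_rows = [{**r, feature: v} for r, v in zip(rows, values)]
        drops.append(baseline - accuracy(model, shuffled_rows, labels))
    return mean(drops)


def partial_dependence(model, rows, feature, grid):
    """Average prediction when `feature` is set to each grid value for every row."""
    return [mean(model({**r, feature: v}) for r in rows) for v in grid]


rng = random.Random(42)
rows = [
    {"income": rng.random(), "tenure": rng.random(), "postcode_digit": rng.randint(0, 9)}
    for _ in range(200)
]
labels = [black_box(r) for r in rows]  # labels taken from the model itself, for illustration

importances = {
    f: permutation_importance(black_box, rows, labels, f)
    for f in ("income", "tenure", "postcode_digit")
}
pdp_income = partial_dependence(black_box, rows, "income", [0.0, 0.25, 0.5, 0.75, 1.0])
print(importances)
print(pdp_income)
```

In this toy setup the labels come from the model itself, so baseline accuracy is perfect; with real data you would use held-out cases with true outcomes. The ignored `postcode_digit` feature scores exactly zero, which is the kind of signal that helps confirm what a model does and does not rely on.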

Algorithmic Decision-Making

By designing models that provide transparent decision-making processes, you can build trust with stakeholders and ensure that your algorithmic systems are fair, reliable, and accountable.

This transparency is vital to ensuring that your AI systems are aligned with your business values and goals.

As a small business owner, you must verify that your algorithmic decision-making processes are explainable, auditable, and compliant with regulatory frameworks.

This involves implementing human oversight mechanisms to detect and prevent biases, errors, and unfair outcomes.

By doing so, you can demonstrate accountability and build trust with your customers, partners, and regulatory bodies.
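A human oversight mechanism can be as simple as a routing rule that sends uncertain or high-stakes decisions to a person instead of applying them automatically. This is a minimal sketch; the `Decision` fields and both thresholds are hypothetical and would need to be set for your own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    outcome: str        # e.g. "approve" or "decline"
    confidence: float   # model's probability for the chosen outcome, 0..1
    amount: float       # money at stake in this decision


def route(decision, min_confidence=0.8, max_auto_amount=5_000.0):
    """Decide whether an AI decision can be applied automatically.

    Returns (route, reason) where route is "auto" or "human_review".
    The thresholds are illustrative defaults, not recommendations.
    """
    if decision.confidence < min_confidence:
        return "human_review", f"confidence {decision.confidence:.2f} below {min_confidence:.2f}"
    if decision.amount > max_auto_amount:
        return "human_review", f"amount {decision.amount:,.0f} above auto-approval limit"
    return "auto", "within confidence and amount limits"


queue = [
    Decision("A-101", "approve", 0.93, 1_200.0),
    Decision("A-102", "decline", 0.61, 800.0),
    Decision("A-103", "approve", 0.97, 12_000.0),
]
for d in queue:
    print(d.case_id, *route(d))
```

Recording the reason alongside the route gives reviewers and auditors a plain-language account of why a case needed human attention.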

In addition, transparent decision-making processes enable you to identify areas for improvement, refine your models, and optimise your business operations.

Model Explainability Metrics

You need to quantify the explainability of your AI models using metrics that accurately measure the transparency and accountability of your algorithmic decision-making processes. This is essential in building trust with your customers and stakeholders, as well as ensuring compliance with regulatory requirements.

To achieve this, you must select the right metrics for model explainability.

Model Fidelity: This metric evaluates how well your model’s explanations align with its actual behaviour. High fidelity indicates that the explanations faithfully reflect how the model behaves, so you can rely on them.

Metric Selection: Choose metrics that are relevant to your specific use case and business goals. These may draw on explanation methods such as feature importance scores, partial dependence plots, or SHAP values.

Explainability-Accuracy Trade-off: Be aware of the trade-off between model explainability and accuracy. While high explainability is desirable, it may come at the cost of reduced accuracy.
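Model fidelity can be measured directly: run the model and its explanation on the same inputs and count how often they agree. In the hypothetical sketch below, a one-line rule serves as the explanation for a weighted scoring model, and both operate on small integer scales so the result is exact.

```python
def fidelity(model, explanation, inputs):
    """Share of inputs on which the explanation reproduces the model's output."""
    agree = sum(1 for x in inputs if model(x) == explanation(x))
    return agree / len(inputs)


def black_box(x):
    # Hypothetical model: income and tenure (0-10 scales) combined with fixed weights.
    income, tenure = x
    return int(7 * income + 3 * tenure >= 50)


def rule_explanation(x):
    # Human-readable rule offered as the explanation: "approve when income >= 6".
    income, _tenure = x
    return int(income >= 6)


grid = [(income, tenure) for income in range(11) for tenure in range(11)]
score = fidelity(black_box, rule_explanation, grid)
print(f"fidelity: {score:.2f}")  # fidelity: 0.88 (107 of 121 inputs agree)
```

Here the simple rule agrees with the model on 107 of 121 inputs, disagreeing only near the decision boundary. That gap illustrates a related trade-off: a more detailed rule would be more faithful but harder to read.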

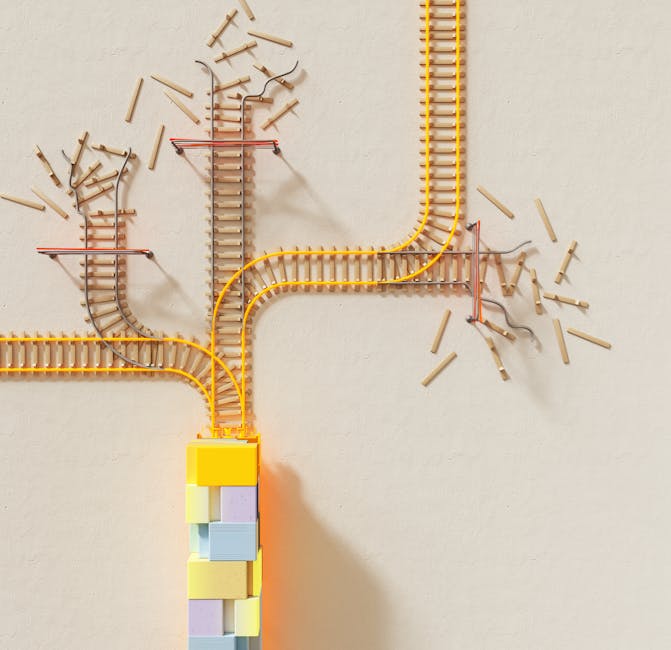

From Black Box to Glass Box

As AI systems increasingly influence critical decision-making, transforming their opaque ‘black box’ nature into transparent ‘glass boxes’ has become imperative.

You, as a small business owner, need to understand how AI-driven decisions are made, especially when they affect your customers, revenue, and reputation. AI has reached an inflexion point where tech transparency is no longer a luxury but a necessity.

The black box conundrum arises from the complexity of AI models, which often involve intricate algorithms, neural networks, and vast datasets. This opacity can lead to biased or discriminatory outcomes, undermining trust in AI-driven decisions.

To overcome this, you need to demand explainable AI that provides insights into the decision-making process.

The shift from black box to glass box requires a fundamental transformation in AI design. You should expect AI systems to provide transparent, interpretable, and reproducible results.

This can be achieved through techniques like model explainability, feature attribution, and visual analytics. By making AI more transparent, you can identify biases, errors, and areas for improvement, ultimately leading to more informed decision-making.

As you venture forth on this journey, remember that tech transparency is a critical component of trustworthy AI and should be a priority in your small business applications.

Ensuring Accountability in AI

Numerous AI incidents over the past decade have highlighted the need for accountability mechanisms to prevent and mitigate AI-driven harm. As you integrate AI into your small business, it’s vital to make your AI’s decision-making processes transparent and to remain accountable for any consequences that may arise.

You must establish clear lines of accountability to avoid blame-shifting and to ensure that someone is responsible for AI-driven harm.

This can be achieved by:

- Implementing AI governance frameworks that outline roles, responsibilities, and decision-making processes.

- Establishing clear regulatory compliance protocols to ensure adherence to existing laws and regulations.

- Developing incident response plans to respond quickly to AI-driven harm and minimise its impact.
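Accountability and incident response both depend on being able to reconstruct what an AI system decided, when, and on whose watch. The sketch below assumes a simple append-only JSON Lines log; the `DecisionRecord` fields, model versions and file location are hypothetical and not a regulatory standard.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One auditable AI decision. Field names are illustrative, not a standard schema."""
    decision_id: str
    model_version: str
    inputs: dict
    output: str
    top_factors: list          # explanation shown to reviewers, e.g. [["income", -0.4]]
    accountable_owner: str     # named role answerable for this system
    reviewed_by: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(path, record):
    # Append-only JSON Lines: one decision per line, easy to search in an incident.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")


def records_for_model(path, model_version):
    # During incident response: pull every decision made by a suspect model version.
    with open(path, encoding="utf-8") as fh:
        return [r for r in map(json.loads, fh) if r["model_version"] == model_version]


log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
append_record(log_path, DecisionRecord(
    "D-0001", "pricing-v3", {"basket_value": 84.0}, "discount_10pct",
    [["basket_value", 0.6]], "Operations Manager"))
append_record(log_path, DecisionRecord(
    "D-0002", "pricing-v2", {"basket_value": 12.5}, "no_discount",
    [["basket_value", -0.3]], "Operations Manager", reviewed_by="reviewer-07"))

affected = records_for_model(log_path, "pricing-v3")
print(len(affected), affected[0]["decision_id"])  # 1 D-0001
```

If a model version is later found to be faulty, a query like `records_for_model` lists every affected decision, so the incident response plan can start from facts rather than guesswork.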

Conclusion

As you gaze into the crystal ball of AI, you see a reflection of transparency and trust.

Explainable AI shines a light on the once-murky waters of decision-making, illuminating hidden biases and errors.

Like a masterful conductor, XAI harmonises the symphony of data, ensuring accountability in every note.

With transparent models, the veil of mystery lifts, and the black box transforms into a glass box, radiating clarity and confidence.

Contact us to discuss our services now!